---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In[35], line 5

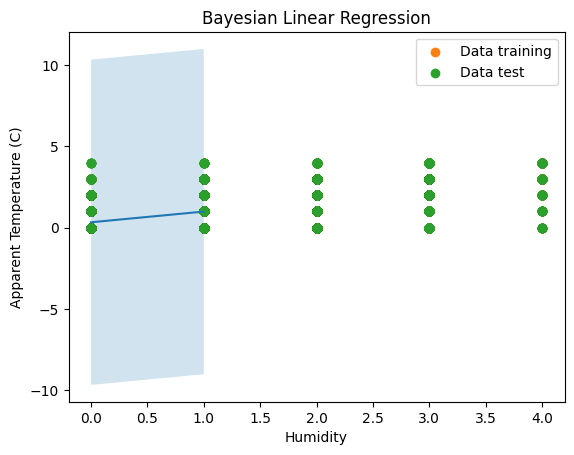

2 mu_pred_train, mN, SN = bayesian_poisson_regression(x, y, linear_basis, alpha=1.0, phi_est=2.0)

4 # Plot

----> 5 plot_bayesian_poisson(mN, SN, x, linear_basis, 2.0)

Cell In[33], line 4, in plot_bayesian_poisson(mN, SN, x, basis_function, phi_est)

2 mean, std = predict_bayesian_poisson(mN, SN, x, basis_function, phi_est)

3 plt.plot(x.flatten(), mean, label="Mean prediction")

----> 4 plt.fill_between(x.flatten(), mean - std, mean + std, alpha=0.3, label="Credible interval")

5 plt.title("Bayesian Poisson Regression (overdispersed)")

6 plt.legend()

File c:\Users\erik4\AppData\Local\Programs\Python\Python313\Lib\site-packages\matplotlib\pyplot.py:3340, in fill_between(x, y1, y2, where, interpolate, step, data, **kwargs)

3328 @_copy_docstring_and_deprecators(Axes.fill_between)

3329 def fill_between(

3330 x: ArrayLike,

(...) 3338 **kwargs,

3339 ) -> FillBetweenPolyCollection:

-> 3340 return gca().fill_between(

3341 x,

3342 y1,

3343 y2=y2,

3344 where=where,

3345 interpolate=interpolate,

3346 step=step,

3347 **({"data": data} if data is not None else {}),

3348 **kwargs,

3349 )

File c:\Users\erik4\AppData\Local\Programs\Python\Python313\Lib\site-packages\matplotlib\__init__.py:1521, in _preprocess_data.<locals>.inner(ax, data, *args, **kwargs)

1518 @functools.wraps(func)

1519 def inner(ax, *args, data=None, **kwargs):

1520 if data is None:

-> 1521 return func(

1522 ax,

1523 *map(cbook.sanitize_sequence, args),

1524 **{k: cbook.sanitize_sequence(v) for k, v in kwargs.items()})

1526 bound = new_sig.bind(ax, *args, **kwargs)

1527 auto_label = (bound.arguments.get(label_namer)

1528 or bound.kwargs.get(label_namer))

File c:\Users\erik4\AppData\Local\Programs\Python\Python313\Lib\site-packages\matplotlib\axes\_axes.py:5716, in Axes.fill_between(self, x, y1, y2, where, interpolate, step, **kwargs)

5714 def fill_between(self, x, y1, y2=0, where=None, interpolate=False,

5715 step=None, **kwargs):

-> 5716 return self._fill_between_x_or_y(

5717 "x", x, y1, y2,

5718 where=where, interpolate=interpolate, step=step, **kwargs)

File c:\Users\erik4\AppData\Local\Programs\Python\Python313\Lib\site-packages\matplotlib\axes\_axes.py:5701, in Axes._fill_between_x_or_y(self, ind_dir, ind, dep1, dep2, where, interpolate, step, **kwargs)

5696 kwargs["facecolor"] = self._get_patches_for_fill.get_next_color()

5698 ind, dep1, dep2 = self._fill_between_process_units(

5699 ind_dir, dep_dir, ind, dep1, dep2, **kwargs)

-> 5701 collection = mcoll.FillBetweenPolyCollection(

5702 ind_dir, ind, dep1, dep2,

5703 where=where, interpolate=interpolate, step=step, **kwargs)

5705 self.add_collection(collection)

5706 self._request_autoscale_view()

File c:\Users\erik4\AppData\Local\Programs\Python\Python313\Lib\site-packages\matplotlib\collections.py:1340, in FillBetweenPolyCollection.__init__(self, t_direction, t, f1, f2, where, interpolate, step, **kwargs)

1338 self._interpolate = interpolate

1339 self._step = step

-> 1340 verts = self._make_verts(t, f1, f2, where)

1341 super().__init__(verts, **kwargs)

File c:\Users\erik4\AppData\Local\Programs\Python\Python313\Lib\site-packages\matplotlib\collections.py:1404, in FillBetweenPolyCollection._make_verts(self, t, f1, f2, where)

1400 def _make_verts(self, t, f1, f2, where):

1401 """

1402 Make verts that can be forwarded to `.PolyCollection`.

1403 """

-> 1404 self._validate_shapes(self.t_direction, self._f_direction, t, f1, f2)

1406 where = self._get_data_mask(t, f1, f2, where)

1407 t, f1, f2 = np.broadcast_arrays(np.atleast_1d(t), f1, f2, subok=True)

File c:\Users\erik4\AppData\Local\Programs\Python\Python313\Lib\site-packages\matplotlib\collections.py:1442, in FillBetweenPolyCollection._validate_shapes(t_dir, f_dir, t, f1, f2)

1440 for name, array in zip(names, [t, f1, f2]):

1441 if array.ndim > 1:

-> 1442 raise ValueError(f"{name!r} is not 1-dimensional")

1443 if t.size > 1 and array.size > 1 and t.size != array.size:

1444 msg = "{!r} has size {}, but {!r} has an unequal size of {}".format(

1445 t_dir, t.size, name, array.size)

ValueError: 'y1' is not 1-dimensional
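
The check that fails is `array.ndim > 1` on `y1`, which here is `mean - std`. The traceback does not show the shapes returned by `predict_bayesian_poisson`, so the following is only a sketch under an assumption: that `mean` and/or `std` come back as column vectors of shape `(N, 1)`, for example from a design-matrix product. The helper `as_1d` is hypothetical and not part of the original notebook. It flattens each array before the subtraction, which also avoids the case where an `(N,)` array and an `(N, 1)` array silently broadcast to `(N, N)`.

```python
import numpy as np


def as_1d(values):
    """Return `values` as a 1-D float array.

    Hypothetical helper: accepts shapes such as (N,), (N, 1) or (1, N),
    and rejects genuinely 2-D input instead of flattening it silently.
    """
    arr = np.asarray(values, dtype=float)
    if sum(dim > 1 for dim in arr.shape) > 1:
        raise ValueError(f"expected a vector, got shape {arr.shape}")
    return arr.ravel()


# Stand-in data with the ASSUMED shapes (not taken from the notebook).
x = np.linspace(0.0, 1.0, 5).reshape(-1, 1)   # shape (5, 1)
mean = np.exp(x)                              # shape (5, 1)
std = 0.1 * np.ones((5, 1))                   # shape (5, 1)

# Flatten first, then do the arithmetic, so both bounds are shape (5,).
lower = as_1d(mean) - as_1d(std)
upper = as_1d(mean) + as_1d(std)
print(lower.shape, upper.shape)

# Inside plot_bayesian_poisson the call would then read, for example:
# plt.fill_between(as_1d(x), lower, upper, alpha=0.3, label="Credible interval")
```

Printing `mean.shape` and `std.shape` just before the `fill_between` call would confirm whether this assumption holds. If either array turns out to be truly two-dimensional, such as a full covariance matrix instead of its diagonal, the fix belongs in the prediction function rather than in the plotting code.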